273 - Distributed System

Consensus

It allow a collection of machines to work as a coherent group that can survive when its members fail. It is a main building block in building reliable large-scale distributed software systems.

- Quorum Consensus algorithm

A quorum is the minimum number of votes that a distributed transaction has to obtain in order to be allowed to perform an operation in a distributed system. A quorum-based technique is implemented to enforce consistent operation in a distributed system.

Quorum-based voting for replica control

Suppose you have two nodes (A & B) storing the same set of data.

N = # of replicas (nodes)

R = # of READ nodes in quorum

W = # of WRITE nodes in quorum

If R = 1, W = 1, then R + W = N

Client can write into A and read from B (might not see its latest write): inconsistent view.

If R = 2, W = 1, then then R+W > N

Writes go to either A or B, but client must read from both A and B: consistent view.

If a given data item has a total of V votes, the quorums have to obey the following rules:

- ensures that a data item is not read and written by two transactions concurrently, Additionally, it ensures that a read quorum contains at least one site with the newest version of the data item.

- ensures that two write operations from two transactions cannot occur concurrently on the same data item. The two rules ensure that one-copy serializability is maintained.

Raft consensus algorithm

Raft is a consensus algorithm designed as an alternative to Paxos. Raft offers a generic way to distribute a state machine across a cluster of computing systems, ensuring that each node in the cluster agrees upon the same series of state transitions

Decomposition into sub components:

- Leader election : Centralized control simplifies data and task synchronization. Raft uses randomized timers to elect leaders.

- Log replication : the leader must accept log entries from clients and replicate them across the cluster, forcing the other logs to agree with its own

- Safety

Raft achieves consensus via an elected leader. A server in a raft cluster is either a leader or a follower, and can be a candidate in the precise case of an election (leader unavailable). The leader is responsible for log replication to the followers. It regularly informs the followers of its existence by sending a heartbeat message. Each follower has a timeout (typically between 150 and 300 ms) in which it expects the heartbeat from the leader. The timeout is reset on receiving the heartbeat. If no heartbeat is received, the follower changes its status to candidate and starts a leader election.

Non-Consensus Approach

- Consistency As Logical Monotonicity(CALM)

In general terms, the CALM principle says that:

monotonic -> without coordination protocols

non-monotonic -> with coordination protocols.

- logically monotonic distributed code is eventually consistent without any need for coordination protocols (distributed locks, two-phase commit, paxos, etc.)

- eventual consistency can be guaranteed in any program by protecting non-monotonic statements (“points of order”) with coordination protocols.

( a block of code is logically monotonic if it satisfies a simple property: adding things to the input can only increase the output. By contrast, non-monotonic code may need to “retract” a previous output if more is added to its input.)

- Conflict-Free Replicated Data Types (CRDT)

consistency without consensus

CRDTs are objects that can be updated without expensive synchronization/consensus and they are guaranteed to converge eventually if all concurrent updates are commutative and if all updates are executed by each replica eventually.

state-based and operation-based approach, and based on the model of replication they define two types of CRDTs, CvRDT (convergent replicated data type) and CmRDT ( commutative replicated data type).

- ACID 2.0

- Associative

- Commutative

- Idempotent

- Distributed

Bloom filter

A Bloom filter is a space-efficient probabilistic data structure, that is used to test whether an element is a member of a set. It tells us whether a search key is definitely not in the set or may be in the set.

Two Bloom Filters operations:

- The ability to add an extra object ‘x’ to the set ‘S’.

- To determine whether a given object ‘x’ is a member of set ‘S’.

空間效率和查詢時間都遠遠超過一般的演算法,缺點是有一定的誤識別率和刪除困難。

While risking false positives, Bloom filters have a strong space advantage over other data structures for representing sets.

Bloom filters also have the unusual property that the time needed either to add items or to check whether an item is in the set is a fixed constant, , completely independent of the number of items already in the set

Gossip Protocol

3 Stages in Gossip:

- Susceptible: Node “n” has not yet received an update.

- Infective: Node “n” has received an update and is now ready to forward the update.

- Removed: Node “n” has the update but is no longer willing to share it.

2-Style of Epidemic Protocols:

Anti-entropy

- Each Node “n” periodically contacts a random peer “p” selected from the pool.

- Then, n-p engages in an information exchange protocol: n to p (push), n from p (pull), or both push-pull.

Rumor mongering (or Dissemination protocols)

- Nodes are initially ignorant.

- When an update is received by a node, it becomes a hot rumor.

- While a node holds a hot rumor, it periodically chooses a random peer from the pool and sends (push) the rumor to it.

- Eventually, a node will lose interest in spreading the rumor.

Each node maintains a small local membership table that provides a partial view on the complete set of nodes, and periodically refreshes the table using a gossiping procedure.

Performance

Capacity: Amount of resource

Utilization: % of capacity using

Processing Time: Time for a request to process.

Latency: Any wait time to start processing the request including network transfer.

Throughput: request/second

- Threads

⋅⋅ General-purpose solution for managing concurrency

⋅⋅ Multiple independent execution streams.

⋅⋅ Shared state.

⋅⋅ Pre-emptive scheduling

⋅⋅ Synchronization via Locks, Semaphore, Conditions.

Usage

OS: one kernel thread for each user process •CPU-bound Apps: one kernel thread per CPU (E.g. Scientific applications) •Distributed Systems: process requests concurrently (overlap I/Os) •GUIs: threads correspond to user actions

Problem

Synchronization : Must coordinate access to shared resources with locks. Forget to hold a lock? Corrupted data.

Deadlock: Circular dependencies among locks. Each process waits for some other process: system hangs!

Callbacks don’t work with locks.

- Events

⋅⋅ One execution stream: no CPU concurrency

⋅⋅ Register interest in event callbacks.

⋅⋅ Event loop (scheduler) waits for events, invokes handlers.

⋅⋅ NO preemption of event handlers.

⋅⋅ Handlers generally short-lived.

Usage

GUIs or Browser’s HTML DOM: One handler for each event: press button, right-click on mouse) Handler implements behavior (delete, undo files, etc.) •Distributed Systems: One handler for each source of input (socket) Handler processes incoming request, sends response. Event-driven I/O for I/O even loop.

Problem

Long-running handlers make application non-responsive.

Cannot maintain local state across events because handler must return.

No CPU concurrency

Event-driven I/O not always well supported (e.g. poor write buffering)

- Threads vs. Events

Events avoid concurrency as mush as possible, thread embrace

Debugging easier with events.

Application logic flow are typically sequential, so it’s harder to code in event-driven style.

Events are faster than threads on single CPU : no locks, not context-switching

Threads provide true concurrency

Use events for GUIs, distributed systems.

Only use threads where true CPU concurrency is needed.

- scale up: make faster for use experience, re-factor code

- scale out: optimizing for more hundreds users

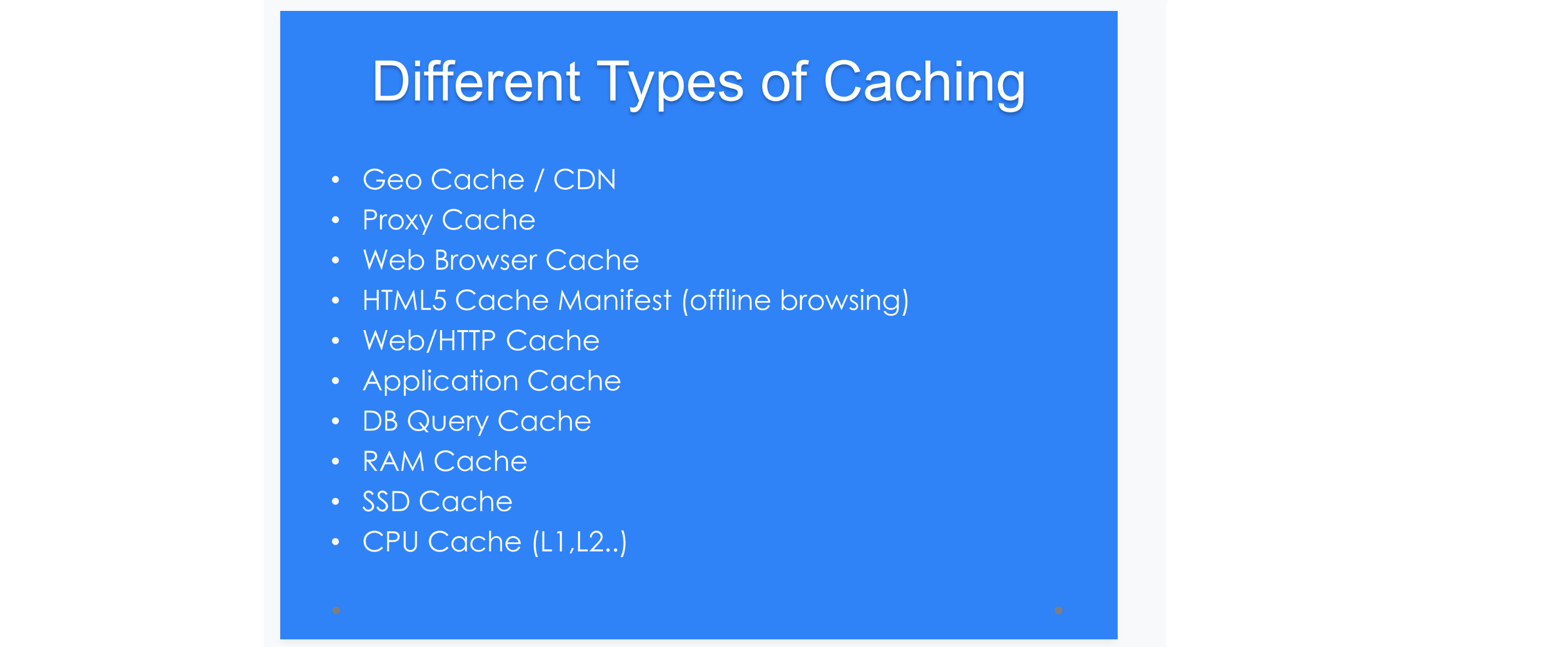

application caching, replication cache, update database and local data at the same time

application cache above is for content, down is for read only / hot data

Parallel Programming

Programming model :

- shared memory

Multiple threads communicating through memory, so it need locking and synchronization to keep atomicity. Many scalability issues -- deadlock, starvation, race condition

- distributed memory

Data distribution and communication orchestration is essential for performance.

- Messaging passing

Process communicate by explicit send-receive pairs

| Advantage of Share memory | Advantage of Message Passing |

|---|---|

| Implicit communication | Explicit communication (send/receive) |

| Low overhead when cached | Easier to control data placement |

| Disadvantages of Shared Memory | Disadvantages of Message Passing |

|---|---|

| Complex to build scalable system | Message passing overhead can be quite high. |

| Requires synchronization | More complex to program |

| Hard to control data placement within caching system | Introduces question of reception technique |

- Single Program Multiple Data pattern (SPMD):

the same program runs against multiple data on each processor or node.

= initialize -> split data correctly and evenly -> run the same program -> combine the results -> finalize

X dynamic load balancing

- Master-worker pattern:

Particularly relevant for problems using task parallelism pattern where task have no dependencies

Main challenge is determining when the entire problem is complete, because you cannot know the finishing time of each tasks

- Future/Defer

FutureTask can be used to load lengthy computation that can be started before the results are needed, like loading info.

- Fork/Join pattern:

Supports divide-and-conquer pattern. using the work-stealing

- Parallel Array - Fine-grained Algorithm

Parallelize large data set by recursively break it into smaller data sets.

How to choose a design pattern that facilitates the mapping of tasks to units of execution?

- Organize by tasks 2. Organize by data decomposition 3. Organize by flow of data

4 steps to crate a parallel program

decomposition -> assignment -> orchestration -> mapping

- Data Parallelism

Array data structure – by rows, columns or blocks

Recursive data structure – trees into sub-trees

- Task Parallelism

Each execution process (thread) performs different functions on same or different data.

- Pipeline Parallelism

Each task will do the job while the previous one finishing. Bandwidth up! But still the same latency

Amdahl's law

A program can be sped up by adding computing resources, based on proportion of serial and parallelizable components.

p = fraction of work that can be parallelized 1-p = the remaining work that cannot be parallelized

n = the number of processor

1/ ((1-p) + (p/n))

speed up = old running time / new running time

3 Keys to Parallel performance

- Coverage of parallelism in algorithm : Amadahl's law

- Granularity of partitioning among CPUs : load balance, communication cost

- Locality of computation and communication : Communication between CPUs or between CPUs and their memories.

Single Thread Performance

- Load balance the work among processors. 2. Make execution on each processor faster

Performance Consideration

For computation-intensive (CPU-bound) tasks that do no I/O and access no shared data,

◦ N cpu or N cpu + 1 threads -> optimal throughput, because More threads can degrade performance as the threads compete for CPU and memory resources.

Load balancing factors

- Tasks costs(load balance) 2. Tasks dependency 3. locality

MERKLE TREES

- Implemented based on Binary Trees.

- Hash the data and then stores the data hash in the leaf nodes only.

- Each node has up to 2 children.

- Space efficient as it stores hashes instead of full content of the files.

- Uses for data verification in distributed systems: P2P networks, Bitcoin, Git.

- Bitcoin uses it as a digital fingerprint of the entire set of transactions.

If there is an odd number of transactions, the last transaction hash will be duplicated to create an even number of leaf nodes.

Mining

Mining is the mechanism that allows the blockchain to be a decencentralized security. The main job is verifing the legitimacy of a transaction

Proof-Of-Work: Miners compete to solve a difficult mathematical problem based on a cryptographic hash algorithm. The solution found is called the Proof-Of-Work

Proof of Stake: a person can mine or validate block transactions according to how many coins he or she holds. This means that the more Bitcoin or altcoin owned by a miner, the more mining power he or she has.

Zero Knowledge Proof : One party can approve to another party without conveying any other information.

- Compleness : If the statement is true, the honest verifier will be convinced of the fact by an honest prover.

- Soundness : If the statement is false, no cheating prover can convince the honest verifier that it is true, except with some small probability.

- Zero-knowledge : Just knowing the statement, not the secret, is sufficient to show that the prover knows the secret.

BlockChain

Digital currency and online payment system that uses encryption techniques to

- Regulate the generation of units of currency

- Verify the transfer of funds

- Decentralized virtual currency

- Mining, anyone can use their machines to verify and record payments into the public ledger.

Ownership of bitcoin is established through private and public keys, wallet with a bitcoin address.

Mining:

It's an operation of inverse hashing to determine a nonce.

miners complete to solve a difficult mathematical problem based on cryptographic hash algorithm (known as Proof-of-Work).

The competition to solve the PoW algorithm is to earn the reward and the right to record transactions on the blockchain.

Block chain smart contract: piece of code that lives on a blockchain, not a server. When a pre-programmed condition is triggered, the smart contract executes the corresponding contractual clause.

Replication

- To keep data geographically close to users.

- To allow the system to continue working even if some parts of the system have failed.

- To scale out the number of nodes that can serve read queries.

Leader-based Replication

client --> read/write --> Leader ----[write]----> Followers ---> Leader -----[response] --> client

Hashing

Hashing is a process that maps data of a variable length to data of a fixed length.

A common way of load balancing across n cache nodes is to put object o in cache node number hash(o) mod n.

=> disadvantage: add or remove from cache nodes, every object needs to hash to a new location

Consistent Hashing (CH)

Hash both objects and caches using the same hash function to map the cache to an interval.

When the cache is removed, its interval is taken over by a cache with an adjacent interval while the other caches remain unchanged, only K/n keys need to be remapped on average, where K is the number of keys, and n is the number of slots.

Rendezvous(HRW) Hashing

Rendezvous hashing uses Highest Random Weight (HRW) to distribute objects uniformly over all nodes.

When a node is removed, only the keys mapped to that node need to be remapped .